Byte #3 - If Clean Code Had a Prompting Twin!

Welcome back to HosseiNotes - your weekly Byte of simplified AI engineering, with a side of fun! 🚀 😅

So far, we’ve talked about how to write better prompts and give your AI some identity. Now it’s time to zoom out a bit and ask:

🧠 Where does prompt engineering actually fit in real-world AI systems?

Because here’s the thing:

🤖 Prompt engineering isn’t a hack. It’s architecture.

It’s become a foundational layer in how we build, scale, and maintain AI products.

🧠 Prompting = Instruction Protocol

In modern LLM-based systems, your prompt is the instruction protocol - the contract between your system and the model.

It defines:

What the model should do (and not do) 🔧

How it interprets context 🧠

How structured the output is (or isn’t) 📦

Whether it saves the day or hallucinates a completely new database schema 🦄

And when that protocol is clean, explicit, and well-structured… Your AI actually behaves like part of your system - not a creative writer who wandered in from a coffee shop. ☕📜

👨💻 Flashback: Clean Code vs “It Works on My Machine”

You remember Clean Code, right?

The way it separated developers into two species 😅:

One writes maintainable, reusable abstractions so clean it feels like Da Vinci himself took up software engineering. 🧑🎨

The other duct tapes a solution that breaks the second you add a new button 😬

Now apply that to prompting.

I’ve seen it in real projects - prompts that grew into giant spaghetti monsters, bolted together with last-minute edge cases ! Yeah. Not fun when you’re trying to add a new feature or reuse that prompt elsewhere. 😢

Just like clean code scales, clean prompts scale.

You can chain them across flows

You can reuse them across tools

You can reason about what changed when something breaks

And just like with bad code, when you try to patch a dirty prompt, the hallucinations start flying. 🦄

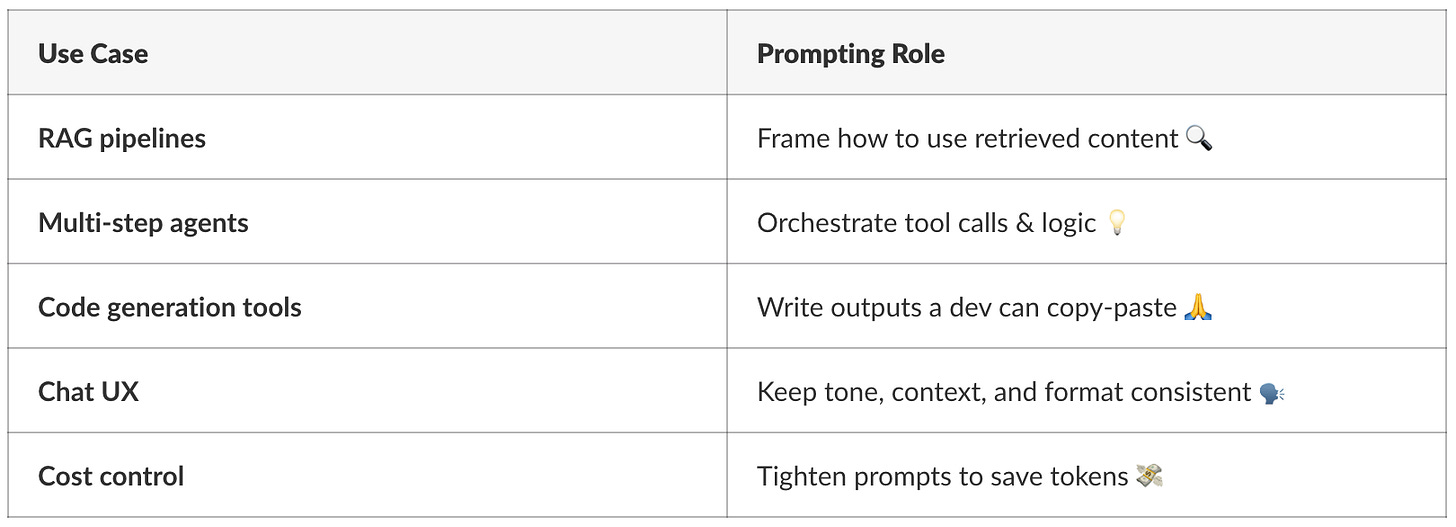

💡 Where Prompt Engineering Shows Up in Real Systems:

Your prompt isn’t “just a prompt.” It’s a behavioral system.

🎯 Prompting Is an Art - and an Engineering Discipline

Writing good prompts isn’t about stuffing in more instructions.

It's about clear thinking, intentional sequencing, and anticipating failure - just like writing production-grade code.

Prompting is not “talking to AI”

It is the art of designing the behavior of a probabilistic system - and getting it to act like a reliable teammate.

You don’t need to be a prompt guru.

But you do need to stop treating your prompts like chat messages. 😅

🎁 Want to Learn This Properly?

You can get really far with trial and error.

But if you want to build real prompting intuition, these are worth your time:

🧪 Prompt Engineering Course (by DeepLearning.AI in collaboration with OpenAI) – solid beginner foundations

🧠 Prompt Engineering Guide (dair-ai) – deep dive, curated

📚 LearnPrompting.org – practical, community-built guide to structured prompting

🛣️ Prompt Engineering Roadmap – great visual overview for devs

🛠️ OpenAI Cookbook – practical, high-signal examples

🧵 Me - HosseiNotes 😉 (you’re already here)

🛠️ TL;DR

Prompt engineering is now a core system design skill - stop treating it like you’re typing into Google 🙏

Clean prompts = better scale, lower cost, fewer bugs

Write prompts like you write production code - with clarity, structure, and purpose

This is the cheapest optimization you can make - and probably the most painful one to ignore

Until then:

Write prompts like you write software - with intent, not improv. 🧱💻

🚀 See you next week with our new byte! 😎